Meagan Futrell • Acoustic

How To Record Acoustic Drums

Marnia Trusty • Acoustic

How To Fix Acoustic Guitar Intonation

Rubi Swick • Acoustic

Which Acoustic Property Makes All Different Instruments And Voices Sound Unique

Inspiration & Ideas

Featured Articles

Dorene Gendron • Earbuds

How To Pair Beats Pro Earbuds

Agnese Almodovar • Earbuds

How To Pair JBL Wireless Earbuds

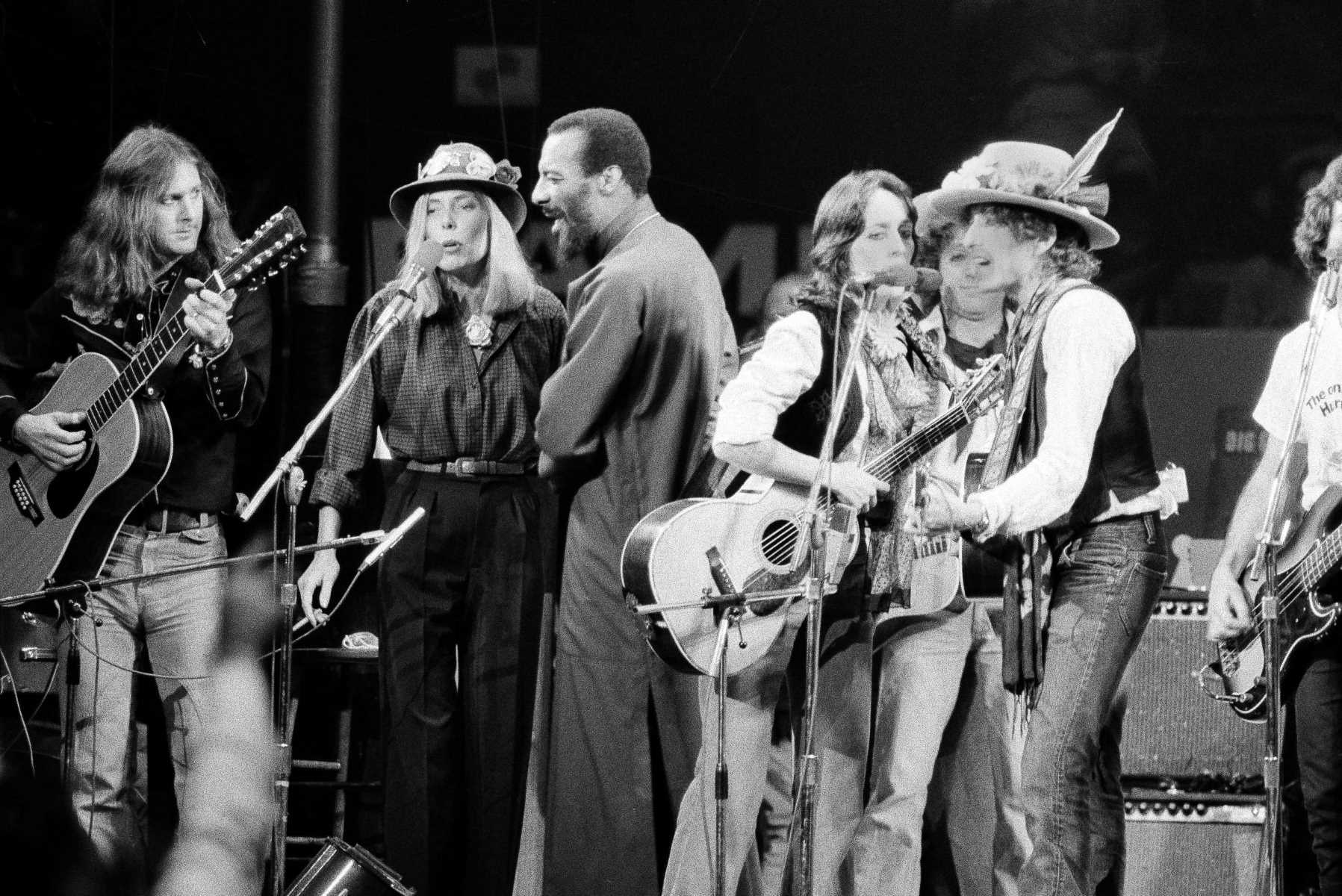

Steffie Ewell • Featured

What Is Folk Music

Gayla Nathan • Featured

How Old Is Cardi B On Love And Hip Hop

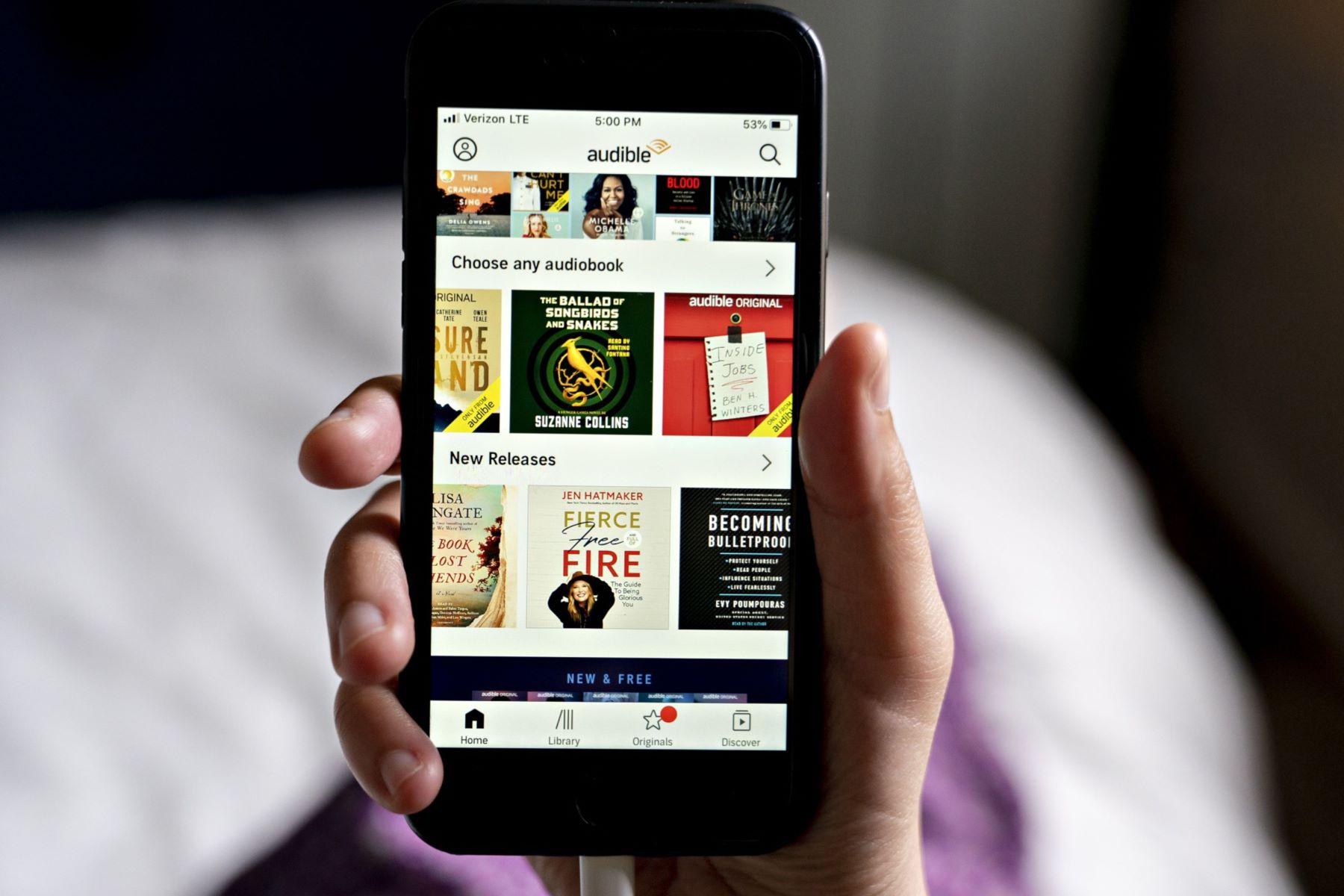

Donny Millsap • Audiobook

How Do I Return An Audiobook On Audible

Irma Leon • Featured